What is HPC?

HPC (High-Performance Computing) resembles a supercomputer in which two or more computer servers are connected through high-speed network media (optical fiber cable and InfiniBand switches). Each computer is called a node. An HPC system is a collection of many individual servers (computers) that can be viewed as a single system.

It offers modern processing capacity, in particular very fast calculation speed and large accessible memory. In HPC, a large number of dedicated processors are used to solve computationally intensive tasks. High-performance computing uses supercomputers and computer clusters to solve advanced computation problems. Today, computer systems approaching the teraflops region are counted as HPC computers. HPC integrates systems administration and parallel programming into a multidisciplinary field that combines digital electronics, computer architecture, system software, programming languages, algorithms, and computational techniques. HPC technologies are the tools and systems used to implement and create high-performance computing systems.

An HPC system is also called a computer cluster: a group of servers working together as one system to provide higher performance and availability than a single computer, while typically being more cost-effective than single computers of comparable speed or availability.

HPC plays an important role in computational science and is used for a wide range of computationally intensive tasks in various fields, including bioscience (biological macromolecules), weather forecasting, chemical engineering (computing the structures and properties of chemical compounds and molecular design), oil and gas exploration, mechanical design (2D and 3D design verification), and physical simulations (such as simulations of the early moments of the universe, the detonation of nuclear weapons, and nuclear fusion).

What is the AGASTYA-HPC?

A typical HPC cluster employs two or more servers, all working together to execute high-performance jobs. Such a system is based on Intel and/or AMD CPUs and runs Linux. The architecture of each server is quite plain: each has its own hardware (network, memory, storage, and CPUs), and the servers are interconnected with copper and fiber cables.

The “AGASTYA” HPC can perform 256 teraflops (256 trillion floating-point operations per second) using CPU-based parallel computing. The cluster of compute nodes also has 8 GPUs (Tesla V100), each capable of 7 teraflops. The facility comes with 800 TB of storage space, and the cluster supports data transfer at 100 Gbps. With a dedicated 210 kVA UPS on a three-phase power supply, we are committed to providing the institute with uninterrupted computing capability. In summary, AGASTYA ranks among the top 20 computing facilities in the country. AGASTYA-HPC relies on parallel-processing technology to offer researchers an extremely fast solution for all their data processing needs.

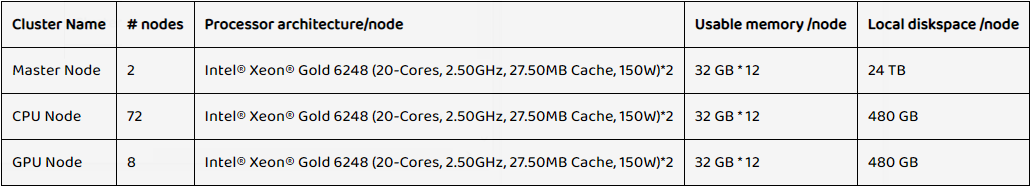

AGASTYA-HPC comprises a set of different compute clusters. The up-to-date list of clusters and their hardware is as follows:

Total Storage: 804 TB

What Is Not Agastya HPC?

Agastya HPC is not any of the following:

1-A personal desktop: Agastya is not a replacement for a personal desktop, even though it can do most of a desktop's jobs. It is a cluster of computer servers to be used for computational jobs that a usual desktop either cannot execute or would take so long to execute that the result is practically useless.

2-A tool that develops your applications.

3-A platform for playing games.

4-A system that answers your queries.

5-Something that thinks like you.

6-A way to run your PC applications much faster for bigger problems.

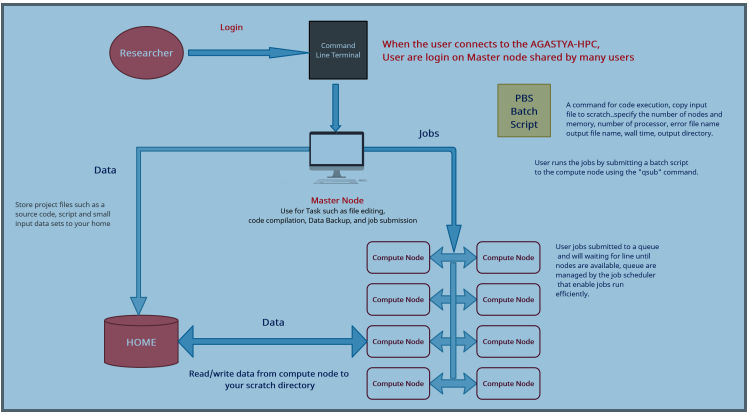

AGASTYA-HPC Architecture

Technical Stuff of AGASTYA-HPC

Batch or interactive mode?

An HPC system can run multiple programs in parallel without any user interaction; this is called batch mode.

When a program running on the HPC needs user interaction, for example to enter input data or to press a key, this is called interactive mode. In that case the computer waits for user input, and the available compute resources are not used while it waits. Interactive mode is typically useful for data visualization jobs.

What are parallel and sequential programs?

A parallel program is a form of computation in which many calculations execute simultaneously. A large program is divided into smaller sub-jobs that are executed concurrently. Parallel computing is the simultaneous use of multiple processing units to solve a computational problem.

Parallel computing can be carried out on a multicore computer, which has multiple processing units within a single machine, or on a cluster, in which multiple computers work on the same task. Multicore processors play an important role in parallel computing on HPC clusters.

A sequential program executes one instruction at a time and does not do calculations in parallel: it uses only one core of a single node, and it does not become faster when more cores are made available to it.

It is nevertheless possible to run multiple instances of a sequential program, each with different input parameters, on the HPC. This is simply another way of executing sequential programs in parallel.
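As a minimal sketch of running several instances of a sequential program side by side, the shell snippet below starts four independent instances in the background, each with a different input parameter, and then waits for all of them. The function `simulate` is a hypothetical stand-in for a real sequential executable, and the `result_*.txt` file names are illustrative only.

```shell
# Hypothetical stand-in for a sequential program: squares its input parameter.
simulate() {
    echo $(( $1 * $1 ))
}

# Directory for the per-instance output files (a temporary one for this sketch).
outdir="$(mktemp -d)"

# Start one independent instance per input parameter; '&' runs it in the background.
for n in 1 2 3 4; do
    simulate "$n" > "$outdir/result_$n.txt" &
done

# Block until every background instance has finished.
wait

# Collect the results in parameter order.
results=""
for n in 1 2 3 4; do
    results="$results $(cat "$outdir/result_$n.txt")"
done
results="${results# }"   # drop the leading space
echo "results: $results"

# Remove the temporary files.
rm -rf "$outdir"
```

Each instance still uses a single core; the speed-up comes only from running the instances at the same time.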

What are Core, Processor, and Node?

In HPC, a server is referred to as a node. Each node (server) contains one or more sockets, and each socket holds a multicore processor consisting of multiple CPU cores that are used to run HPC programs.
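The relationship between nodes, sockets, and cores can be made concrete with a little arithmetic. The sketch below multiplies the three counts to obtain the total number of cores; the function name `total_cores` is hypothetical, and the figures used (2 nodes, 2 sockets per node, 20 cores per socket) are illustrative assumptions, not the actual AGASTYA configuration.

```shell
# total_cores NODES SOCKETS_PER_NODE CORES_PER_SOCKET
# Prints the total number of cores in such a cluster.
total_cores() {
    echo $(( $1 * $2 * $3 ))
}

# Illustrative values only (assumed, not the AGASTYA hardware).
total_cores 2 2 20    # prints 80
```

On a Linux node, the `nproc` command reports how many processing units are available on that machine.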

HPC Stack

The HPC stack is the stack of cluster components. It describes the different components used in an HPC cluster implementation and their dependencies on one another.

Libraries

A library is a collection of resources used to develop software. These may include prewritten code and subroutines, classes, values, or type specifications. Libraries contain code and data that provide services to independent programs, which allows code and data to be shared and changed in a modular fashion. Some executables are both standalone programs and libraries, but most libraries are not executable. Executables and libraries make references, known as links, to each other through a process known as linking, which is typically done by a linker. In the HPC stack, libraries are the development libraries, both serial and parallel, that are associated with compilers and other HPC programs to run jobs.

Originally, only static libraries existed. A static library, also known as an archive, consists of a set of routines that are copied into a target application by the compiler, linker, or binder, producing object files and a standalone executable file. This process, and the resulting standalone executable, is known as a static build of the target application. Actual addresses for jumps and other routine calls are stored in a relative or symbolic form that cannot be resolved until all code and libraries are assigned final static addresses.

Dynamic linking involves loading the subroutines of a library into an application program at load time or run time, rather than linking them in at compile time. The linker does only a minimum amount of work at compile time: it records which library routines the program needs and the index names or numbers of those routines in the library. Dynamic libraries almost always offer some form of sharing, allowing the same library to be used by multiple programs at the same time. Static libraries, by definition, cannot be shared.

With CentOS 7.8 on the cluster, glibc is installed for the operating system and for development activities. The GNU C Library, commonly known as glibc, is the C standard library released by the GNU Project. Originally written by the Free Software Foundation (FSF) for the GNU operating system, the library's development has been overseen by a committee since 2001, with Ulrich Drepper from Red Hat as the lead contributor and maintainer.

Compilers

A compiler is a computer program (or set of programs) that transforms source code written in a programming language (the source language) into another computer language (the target language, often having a binary form known as object code). The most common reason for transforming source code is to create an executable program. The GNU Compiler Collection (GCC) is a compiler system produced by the GNU Project that supports various programming languages. GCC is a key component of the GNU toolchain. As well as being the official compiler of the GNU operating system, GCC has been adopted as the standard compiler by many Unix-like operating systems, including most Linux distributions. It was originally named the GNU C Compiler because it handled only the C programming language. GCC 1.0 was released in 1987, and the compiler was extended to compile C++ in December of that year. Front ends were later developed for Fortran, Pascal, Objective-C, Java, and Ada, among others.

Scheduler

A job scheduler is a software application that is in charge of unattended background executions, commonly known for historical reasons as batch processing. Synonyms are batch system, Distributed Resource Management System (DRMS), and Distributed Resource Manager (DRM). Today's job schedulers typically provide a graphical user interface and a single point of control for the definition and monitoring of background executions in a distributed network of computers.

The resource manager for this cluster is PBS Pro, an open-source HPC job scheduler. It is a fault-tolerant and highly scalable cluster management and job scheduling system for large and small Linux clusters. PBS Pro requires no kernel modifications for its operation and is relatively self-contained.

MPI

Message Passing Interface (MPI) is an API specification that allows processes to communicate with one another by sending and receiving messages.

MPI is a language-independent communications protocol used to program parallel computers. Both point-to-point and collective communication are supported. MPI "is a message-passing application programmer interface, together with protocol and semantic specifications for how its features must behave in any implementation." MPI's goals are high performance, scalability, and portability. MPI remains the dominant model used in high-performance computing today.

Intel MPI is also in use on this cluster. The Intel® MPI Library is a multi-fabric message-passing library that implements the Message Passing Interface, version 3.1 (MPI-3.1) specification. Use the library to develop applications that can run on multiple cluster interconnects.

Which programming languages can be used on “AGASTYA” HPC?

Any programming language, library, or software package can be used on the HPC, provided that it runs on Linux and, specifically, on the version of Linux installed on the compute nodes, CentOS 7.8.

For the most common programming languages, a compiler is available on CentOS 7.8. Supported and common programming languages on the HPC are C/C++, Fortran, Java, Perl, Python, MATLAB, etc. Supported and commonly used compilers are GCC and Intel.

Note: Additional software can be installed on demand. Please contact “AGASTYA” HPC support with your specific requirements.

“AGASTYA” HPC support e-mail: hpc.support@iitjammu.ac.in

Which operating system does “AGASTYA” HPC use?

All nodes in the “AGASTYA” HPC cluster run CentOS 7.8, a Linux distribution built from the sources of Red Hat Enterprise Linux. This means that all programs (executables) should be compiled for CentOS 7.8.

Users can connect to the HPC from any computer in the “AGASTYA” network, irrespective of the operating system on their personal computer. Any of the common operating systems (such as Windows, macOS, or any version of Linux/Unix/BSD) can be used to run and control programs on the HPC.

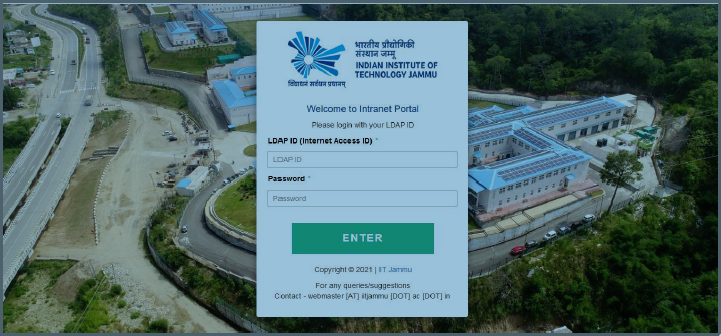

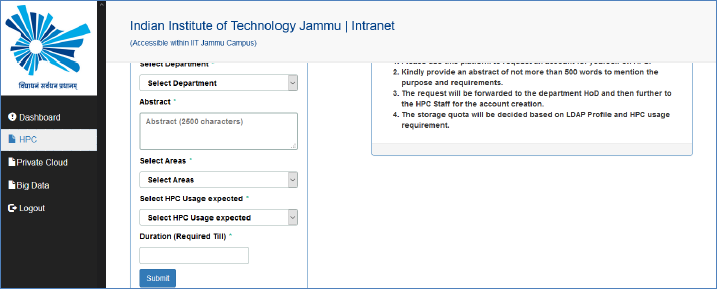

How To Get An Acount on "AGASTYA-HPC"?

user request

First request for the new user account through given dedicated link portal on the IIT Jammu

intranet -:Link - https://intranet.iitjammu.ac.in

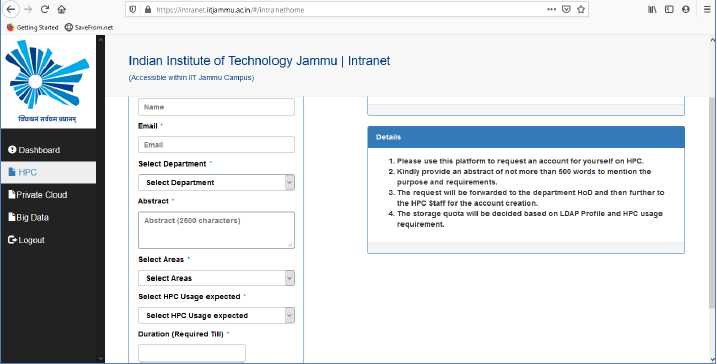

Fill The Details

And fill the required detail after open the link and click on submit button for

request submission.

After final approval you will get the user ID with private key (.Pem file) on your mail ID.

Then you have to download the “private key” and put into your local system from where you

will access to HPC.

Note :-In AGASTYA-HPC cluster we use public/private key

pairs for user authentication (rather than pass-words).

How Do We Connect AGASTYA-HPC?

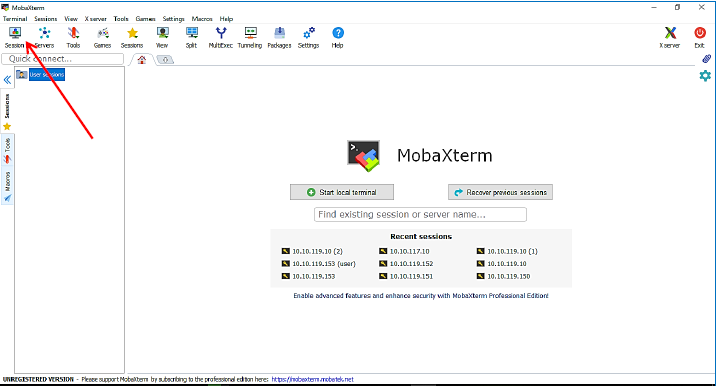

Windows Access On Campus

The user needs their own private key. On a Windows-based operating system, an SSH client tool (PuTTY, MobaXterm, SecureCRT, mRemoteNG) is required. The following steps connect to the HPC using MobaXterm:

1-Download MobaXterm from the Internet to the local system and run the .exe file.

2-After the .exe file is run, the MobaXterm interface opens.

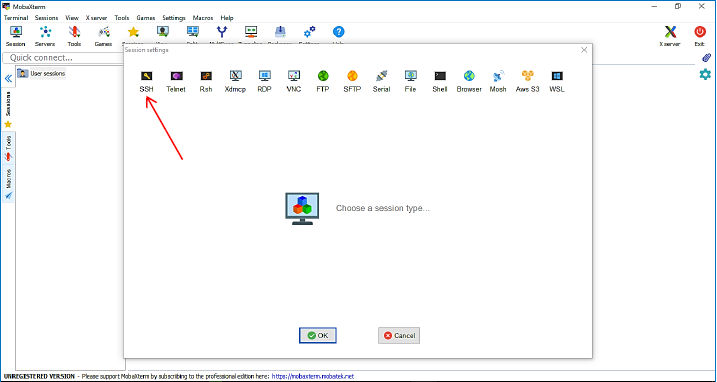

3-Click Session; on the next screen, click SSH to enter the login IP.

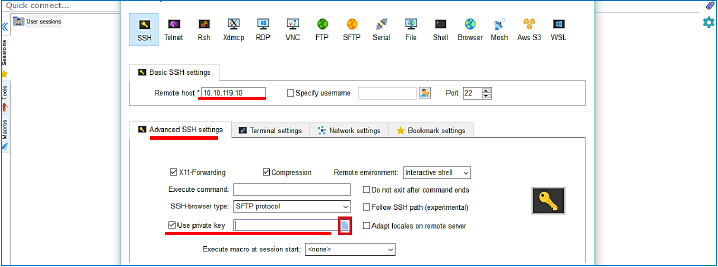

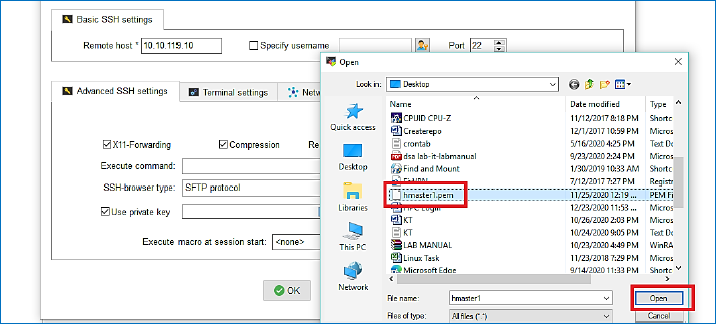

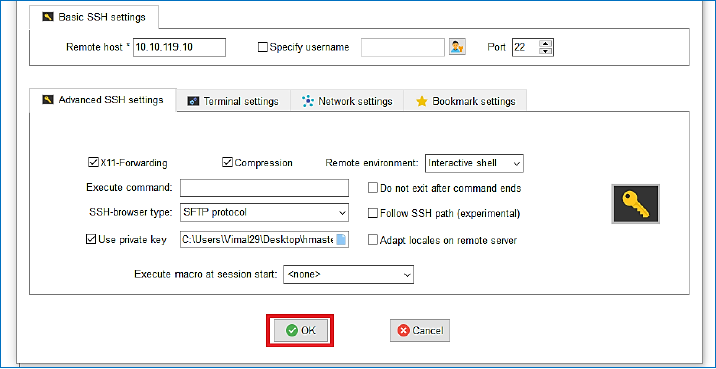

4-Enter the IP in the Remote host field, click the Advanced SSH settings option, select the private key option, and select the path of the .pem file.

5-Click OK.

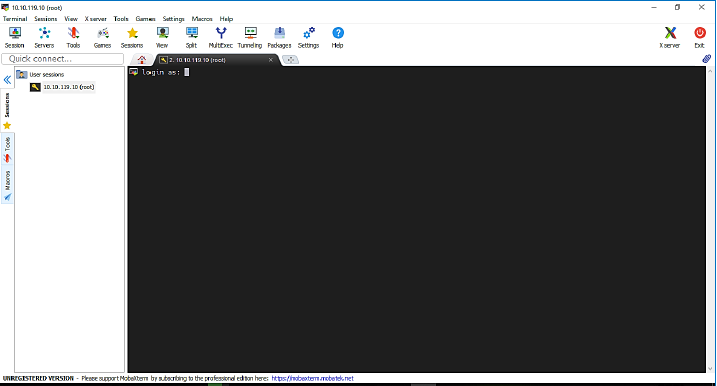

6-The login screen is shown.

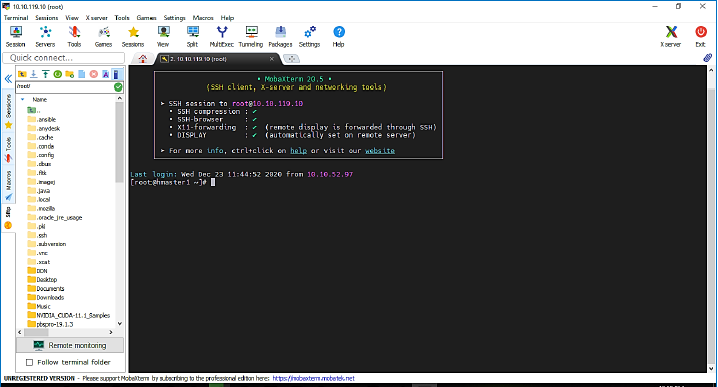

7-Enter your login user name. After the user name is entered, the login succeeds.

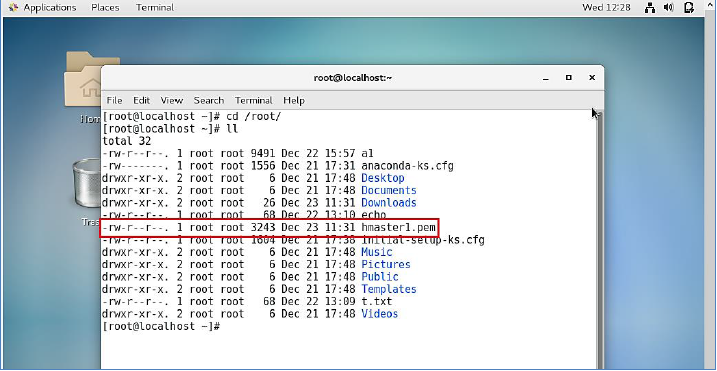

Unix Access On Campus

If User working on UNIX based operating system then User have to

follow the below step.

1-The First, User will Login from Linux system then downloads the

pem file (given by the hpc.support) in our local Directory.

2-After download the pem file then user will open terminal and now

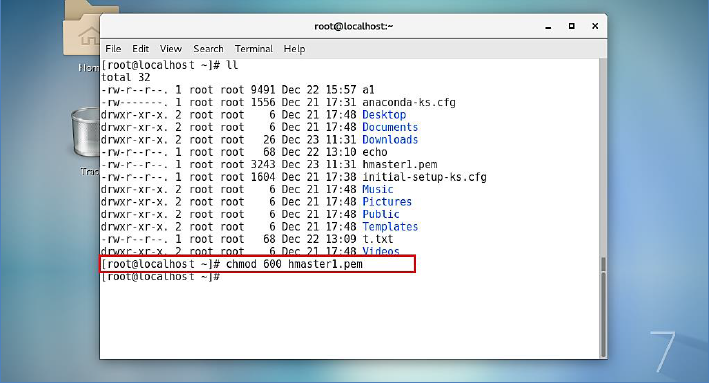

user have to change the permission from 644 to 600 using

following command.

# chmod 600 hmaster1.pem

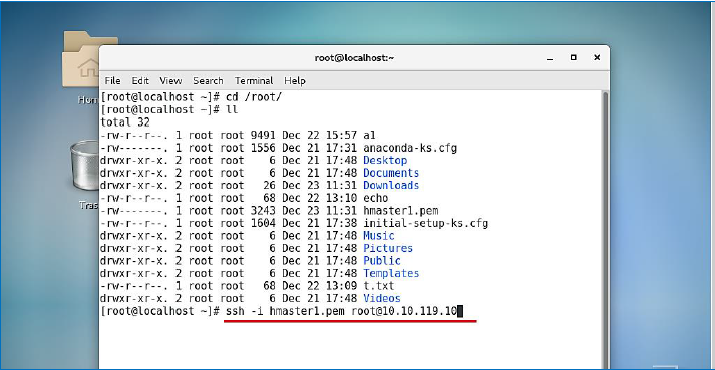

3-From the same location, run the following command to log in over SSH:

$ ssh -i hmaster1.pem username@10.10.119.10

Note: Replace username with your own user name, as provided by hpc.support.

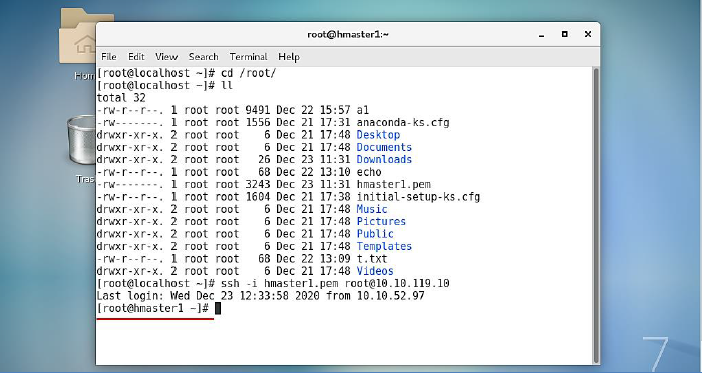

4-After you press Enter, the system asks, the first time only, whether to continue connecting. Type yes, and the login succeeds.

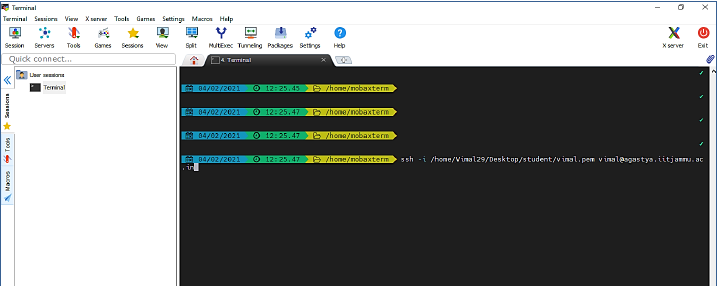

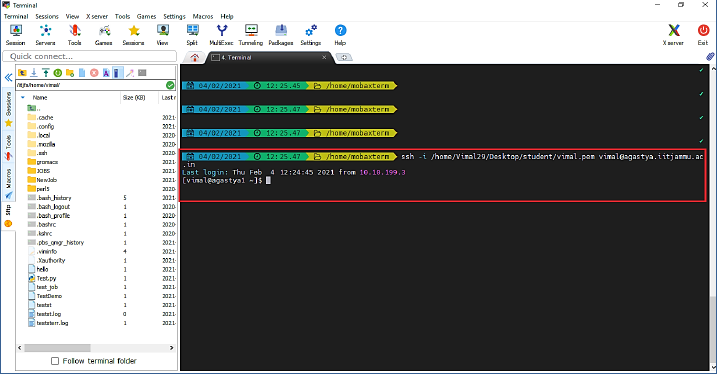
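To avoid retyping the key path and address on every login, OpenSSH can read them from a per-user configuration file. The sketch below is one possible way to set this up, not a site requirement: the helper name `add_hpc_host`, the alias `agastya`, and the key location are assumptions chosen for illustration, while `HostName`, `User`, `IdentityFile`, and `IdentitiesOnly` are standard OpenSSH client options. It writes to a temporary file so that it can be tried safely; on a real system the target would be `~/.ssh/config`.

```shell
# add_hpc_host CONFIG_FILE ALIAS ADDRESS USERNAME KEY_PATH
# Appends a Host entry to the given OpenSSH client configuration file.
add_hpc_host() {
    cat >> "$1" <<EOF
Host $2
    HostName $3
    User $4
    IdentityFile $5
    IdentitiesOnly yes
EOF
    # The configuration file should be readable and writable by its owner only.
    chmod 600 "$1"
}

# Demonstration against a temporary file (on a real system: ~/.ssh/config).
cfg="$(mktemp)"
add_hpc_host "$cfg" agastya 10.10.119.10 username "$HOME/hmaster1.pem"
cat "$cfg"
```

With such an entry in `~/.ssh/config`, `ssh agastya` opens the same connection as the longer `ssh -i` command shown in step 3.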

Windows Access Off Campus

The user needs their own private key. On a Windows-based operating system outside the campus, an SSH client tool (PuTTY, MobaXterm, SecureCRT, mRemoteNG) is required. The following steps connect to the HPC using MobaXterm:

1-Download MobaXterm from the Internet to the local system and run the .exe file.

2-After the .exe file is run, the MobaXterm screen opens.

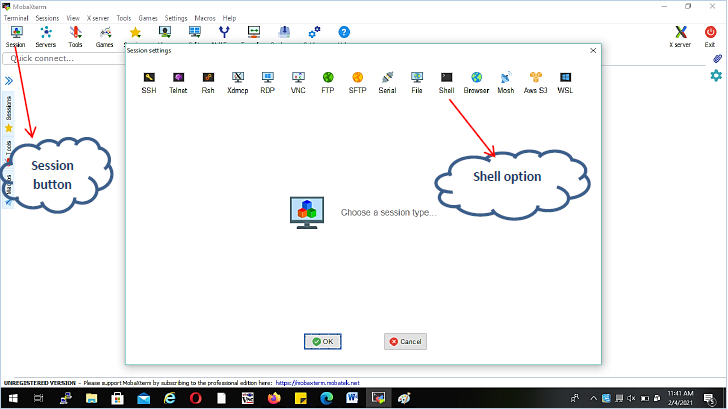

4-Click the Session button; the session screen opens.

5-Click the Shell button; the shell window opens.

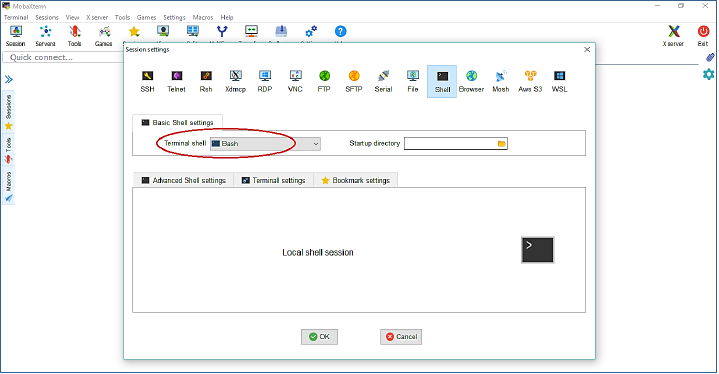

6-Here the terminal shell can be selected; the Bash shell is selected by default. Click OK, and a Bash command-line terminal opens.

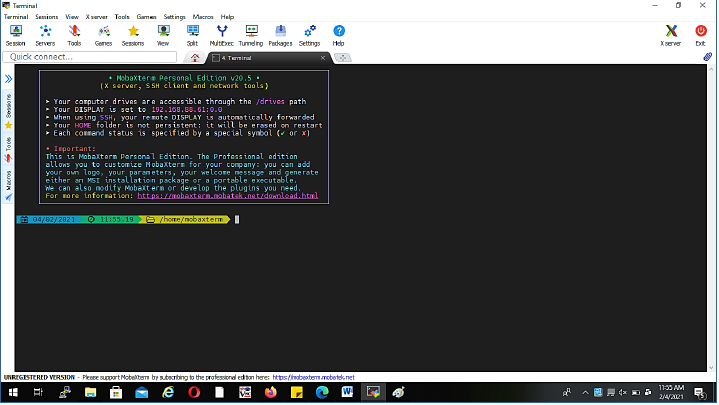

7-Type the following command, with the path of your .pem file, in the terminal and press Enter.

Syntax: $ ssh -i pemfilePath username@agastya.iitjammu.ac.in

$ ssh -i /home/Vimal29/Desktop/student/vimal.pem vimal@agastya.iitjammu.ac.in

Note: In the command above, the .pem file path is “/home/Vimal29/Desktop/student/vimal.pem”; in your case it will be different.

8-The user is then logged in to AGASTYA-HPC.

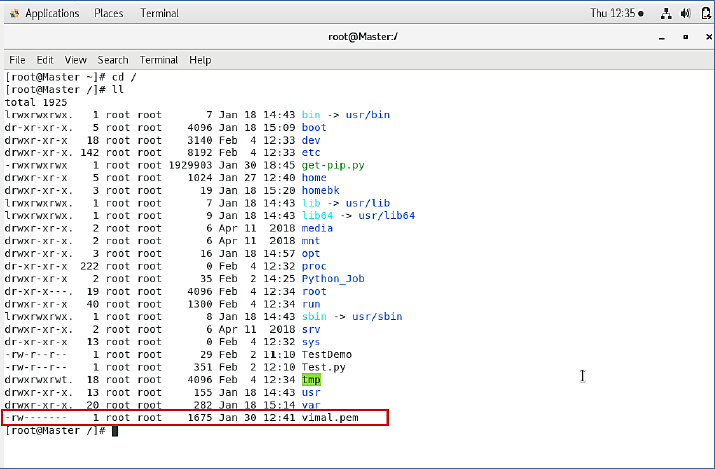

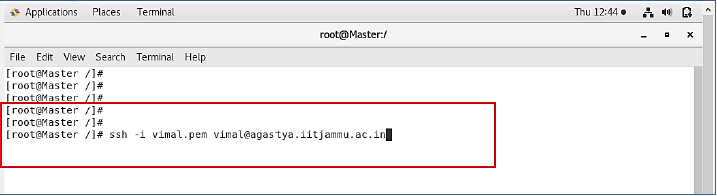

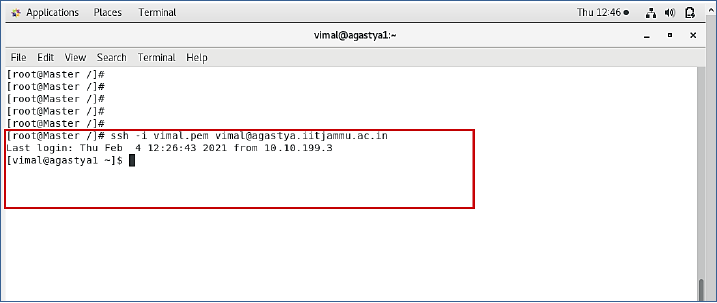

Unix Access Off Campus

On a UNIX-based operating system, follow the steps below.

1-Log in to your Linux system and download the .pem file (provided by hpc.support) to a local directory.

2-After downloading the .pem file, open a terminal and change the file's permissions from 644 to 600 with the following command:

$ chmod 600 vimal.pem

3-From the same location, run the following command to log in over SSH:

Syntax: $ ssh -i pemfile user_name@agastya.iitjammu.ac.in

$ ssh -i vimal.pem vimal@agastya.iitjammu.ac.in

Note: Replace the .pem file name and user name with your own, as provided by hpc.support.

4-After you press Enter, the system asks, the first time only, whether to continue connecting. Type yes, and the login succeeds.

DATA-ACCESS In HPC

DATA-ACCESS Using SCP

From Local Machine to HPC

To transfer a file from a local machine to the HPC, use the following command:

$ scp -i (pem file name) (source file path) user@HPC_IP:(destination path)

EXAMPLE:

$ scp -i 2021PCT1070.pem TestDemo 2021PCT1070@10.10.119.10:/home/gautam

From HPC to Local Machine

To transfer a file from the HPC to a local computer, use the following command:

$ scp -i (pem file name) user@HPC_IP:(source path) (destination path)

EXAMPLE:

$ scp -i 2021PCT1070.pem 2021PCT1070@10.10.119.10:/home/gautam/hello /root/

DATA-ACCESS Using SFTP

From Local Machine to HPC

To transfer a file from a local machine to the HPC, open an SFTP session and use the put command:

$ sftp -i (pem file name) user@HPC_IP

sftp> put (source file path) (destination path)

EXAMPLE:

$ sftp -i 2021PCT1070.pem 2021PCT1070@10.10.119.10

sftp> put TestDemo /home/gautam/

From HPC to Local Machine

To transfer a file from the HPC to a local computer, use the following command:

$ sftp -i (pem file name) user@HPC_IP:(source path) (destination path)

EXAMPLE:

$ sftp -i 2021PCT1070.pem 2021PCT1070@10.10.119.10:/home/gautam/hello /root/
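The two transfer directions can be wrapped in small shell functions so that the key file and login are written only once. This is a sketch under assumptions: the function names `hpc_put` and `hpc_get` and the variables `HPC_KEY`, `HPC_USER`, and `HPC_HOST` are hypothetical, and the values shown are the example values used in this section.

```shell
# Connection details (example values from this section; replace with your own).
HPC_KEY="2021PCT1070.pem"
HPC_USER="2021PCT1070"
HPC_HOST="10.10.119.10"

# hpc_put LOCAL_PATH REMOTE_PATH: copy a file from the local machine to the HPC.
hpc_put() {
    scp -i "$HPC_KEY" "$1" "$HPC_USER@$HPC_HOST:$2"
}

# hpc_get REMOTE_PATH LOCAL_PATH: copy a file from the HPC to the local machine.
hpc_get() {
    scp -i "$HPC_KEY" "$HPC_USER@$HPC_HOST:$1" "$2"
}

# Example calls (commented out because they need access to the cluster):
# hpc_put TestDemo /home/gautam
# hpc_get /home/gautam/hello .
```

Note that the remote side is always written as `user@host:path`, with no space between the colon and the path.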

Job Scheduler in HPC

To run jobs on the HPC, the user needs a job scheduler with a resource manager, so that the maximum resources of the HPC can be used and results are obtained in minimum time. Access to the compute nodes of a cluster goes through the job system. AGASTYA-HPC uses Portable Batch System Professional (PBS Pro) version 19.1.3 for access to all nodes of the cluster. To use the job scheduler, the user first writes a PBS job script, in which the required HPC resources are selected: number of processors, number of nodes, memory, wall time, etc.

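An example PBS job script follows. Lines that begin with # followed by a space are explanatory comments.

#!/bin/bash

# test_job is the sample job name

#PBS -N test_job

# Request 1 processor on 1 node

#PBS -l nodes=1:ppn=1

# Replace queue_name with the name of the queue to use

#PBS -q queue_name

# Request 5 GB of memory in total

#PBS -l mem=5gb

# Files for standard output and standard error

#PBS -o outtest.log

#PBS -e Error.log

# Save the list of allocated nodes

cat $PBS_NODEFILE > nodes

# Run the program; -np matches the number of processors requested above

mpirun -machinefile $PBS_NODEFILE -np 1 executable-binary input-file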
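A job script like the one above can also be written to a file from the shell and checked for syntax errors before it is submitted. The sketch below does this with a here-document and `bash -n`, which parses a script without running it. The file name `test_job.pbs`, the queue name `cpu-test` (borrowed from the job examples later in this guide), and the program name `./my_program` are placeholders.

```shell
# Write the job script; the quoted 'EOF' keeps the $PBS_... variables unexpanded
# so that they are evaluated later, when the job runs on the cluster.
workdir="$(mktemp -d)"
cat > "$workdir/test_job.pbs" <<'EOF'
#!/bin/bash
#PBS -N test_job
#PBS -l nodes=1:ppn=1
#PBS -q cpu-test
#PBS -l mem=5gb
#PBS -o outtest.log
#PBS -e Error.log
cd "$PBS_O_WORKDIR"
cat "$PBS_NODEFILE" > nodes
mpirun -machinefile "$PBS_NODEFILE" -np 1 ./my_program input-file
EOF

# Parse the script without executing it; a non-zero status means a syntax error.
if bash -n "$workdir/test_job.pbs"; then
    echo "syntax OK"
fi

# Count the scheduler directives that were written.
directives="$(grep -c '^#PBS' "$workdir/test_job.pbs")"
echo "PBS directives: $directives"
```

A syntax check of this kind only catches shell errors; whether the requested resources are valid for a queue is decided by the scheduler when the job is submitted.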

Module

With modules, each user has control over their environment and can work with different versions of software without risking version conflicts. On the HPC, all software packages are activated and deactivated through modules, so users should first become familiar with the following module commands.

To check the list of available modules: $ module avail

To load a module: $ module load module-name

To check the list of loaded modules: $ module list

To unload a loaded module: $ module unload module-name

To unload all loaded modules at once: $ module purge

To get the details of a module: $ module help or $ module help module-name

To show a module's environment details: $ module show module-name
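A typical sequence inside a job script is to start from a clean environment and then load exactly the modules the job needs. The helper below is a sketch of that pattern; the function name `setup_modules` is hypothetical, and `codes/matlab-2020` is the module name used in the MATLAB example of this guide. It assumes the `module` command is available in the shell.

```shell
# setup_modules MODULE...
# Unloads everything, loads the given modules, then lists what is loaded.
setup_modules() {
    module purge || return 1
    for m in "$@"; do
        # Stop at the first module that cannot be loaded.
        module load "$m" || { echo "could not load module: $m" >&2; return 1; }
    done
    module list
}

# Example call (needs the module command, so it is left commented out here):
# setup_modules codes/matlab-2020
```

Starting with `module purge` makes the job independent of whatever modules happened to be loaded in the login session.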

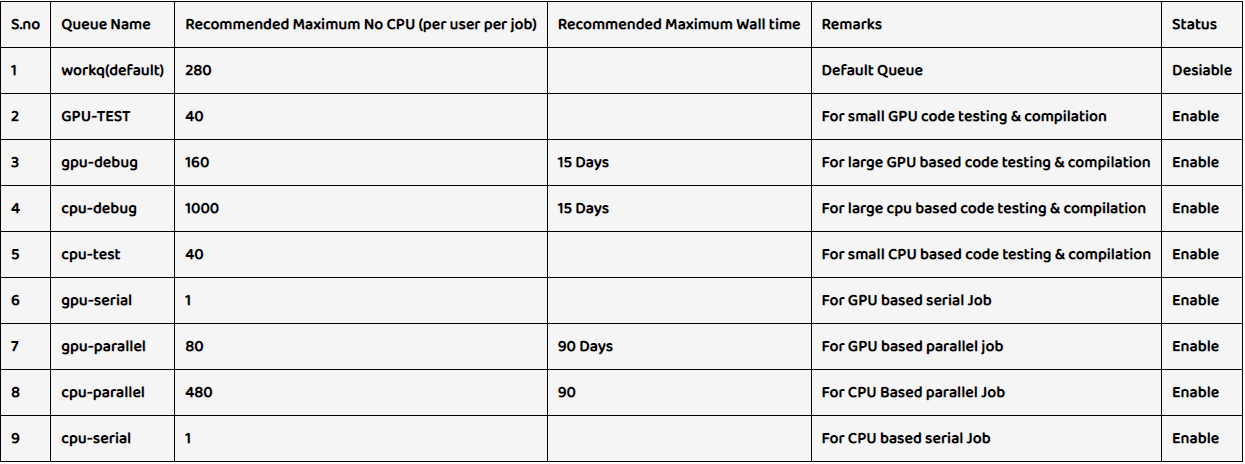

Queue In Agastya HPC

The following queues are available in AGASTYA-HPC. Any of them can be specified with the queue parameter (-q) of the PBS script.

Job Scheduler Command

- Submit a job to the scheduler: $ qsub script_name

- Check the status of jobs: $ qstat

- Check where jobs are running: $ qstat -n

- Check the full information of a job: $ qstat -f JOB_ID

- Delete a job from the queue: $ qdel JOB_ID (it may take 5 to 10 seconds)

- Check the queue information: $ qstat -Q

- List all jobs and their state: $ qstat -a

- List all running jobs: $ qstat -r
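These commands combine naturally in a small helper that submits a job and waits for it to leave the queue. The function below is a sketch, not part of PBS: `submit_and_wait` is a hypothetical name, the polling interval is arbitrary, and the loop relies on the assumption that `qstat JOB_ID` returns a non-zero exit status once the job is no longer queued or running.

```shell
# submit_and_wait SCRIPT [POLL_SECONDS]
# Submits SCRIPT with qsub, then polls qstat until the job is gone.
submit_and_wait() {
    script="$1"
    interval="${2:-30}"

    # qsub prints the identifier of the new job on standard output.
    job_id="$(qsub "$script")" || return 1
    echo "submitted: $job_id"

    # Assumption: qstat fails for a job that is no longer queued or running.
    while qstat "$job_id" > /dev/null 2>&1; do
        sleep "$interval"
    done
    echo "finished: $job_id"
}

# Example call (needs PBS, so it is left commented out here):
# submit_and_wait pbsmat 60
```

A polling interval of 30 to 60 seconds keeps the load on the scheduler low while still noticing promptly when the job ends.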

Get Information On Your Cluster

- List offline and down nodes in the cluster: $ pbsnodes -l

- List information on every node in the cluster: $ pbsnodes -a

Job Execution Steps

- Copy the input file from the local system to the HPC (procedure given in chapter 3 above).

- Write a PBS script file (a sample PBS job script is given above).

- In the PBS script file, load the required modules.

- Run the script with the qsub command: qsub pbsjob_script

- The output is saved in the working directory.

- Download the output from the HPC to the local system (procedure given in chapter 3 above).

HPC Job Example

MATLAB

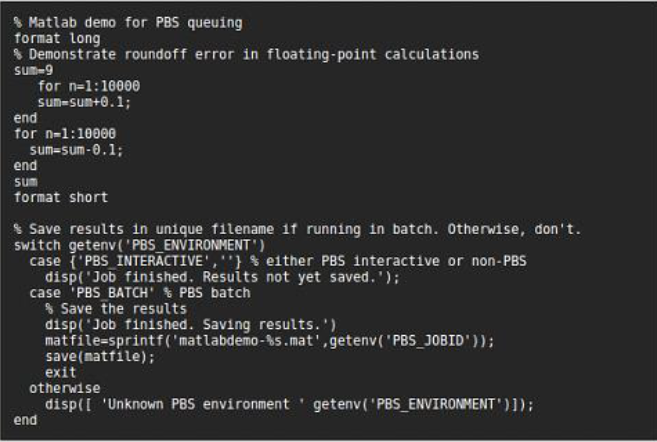

Any MATLAB .m file can be run in the queue. The -r command flag for MATLAB will cause it to

run a single command specified on the command line. For proper batch usage, the specified

command should be your own short script. Make sure that you put an exit command at the end

of the script so that MATLAB will automatically exit after finishing your work. In the

example given below, debugging runs of the program on a workstation or in interactive queue

runs will print a message when the job is finished, and unattended batch runs will

automatically save results in a file based off the job ID and then exit. Failure to include

the exit command will cause your job to hang around until its wall clock allocation is

expired, which will keep other jobs in the queue longer, and also tie up MATLAB licenses

unnecessarily.
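One common way to guarantee that MATLAB exits, even when the script raises an error, is to wrap the call in `try`/`catch` and finish with an explicit `exit`. The helper below is a sketch of that idea; the function name `run_matlab_batch` is hypothetical, and `matlabtest.m` is the sample program used later in this guide.

```shell
# run_matlab_batch SCRIPT.m
# Runs a MATLAB script non-interactively and always exits afterwards:
# status 0 on success, status 1 if the script raised an error.
run_matlab_batch() {
    matlab -nodisplay -nosplash \
        -r "try, run('$1'); catch err, disp(getReport(err)); exit(1); end; exit(0);"
}

# Example call inside a PBS job script (needs MATLAB, so it is commented out):
# run_matlab_batch ./matlabtest.m
```

Because the error branch also calls `exit`, a failing script releases its cores and its MATLAB license immediately instead of waiting for the wall clock limit.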

Example-1: Sample MATLAB (matlabdemo.m)

PBS Jobs Script for Matlab

Single Core

#!/bin/bash -l

#PBS -q batch

#PBS -N NetCDF_repeat

#PBS -l nodes=1:ppn=1

#PBS -l walltime=100:00:00

#PBS -o out.txt

#PBS -e err.txt

cd $PBS_O_WORKDIR

module load matlab

matlab -nodesktop -nosplash -r running_monthly_mean &> out..lo

Multicore

#!/bin/bash -l

#PBS -q batch

#PBS -N parallel_matlab

#PBS -l nodes=1:ppn=6

#PBS -l walltime=100:00:00

cd $PBS_O_WORKDIR

module load matlab

matlab -nodesktop -nosplash -r my_parallel_matlab_script &> out..log

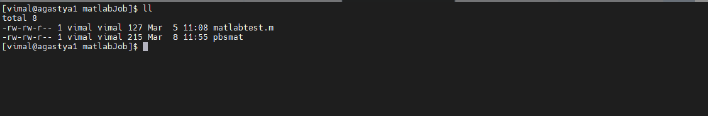

Example of MATLAB with PBS

Consider a simple MATLAB program, “matlabtest.m”:

matlabtest.m

a=4;

b=6;

c=a+b

fprintf ('%i',c)

To run the above program with the PBS scheduler, use the following PBS script, named “pbsmat”:

pbsmat

#!/bin/bash

#PBS -N pbsmat

#PBS -l nodes=20:ppn=40

#PBS -l mem=2gb

#PBS -q cpu-test

cd $PBS_O_WORKDIR

module load codes/matlab-2020

matlab -nodisplay -nodesktop -nosplash -r "run('./matlabtest.m'); exit"

To run the script, use the following command:

$ qsub pbsmat

To check the job status, use the following command:

$ qstat -an

Example of GAUSSIAN with PBS

#!/bin/bash

#PBS -N g16test

#PBS -l nodes=1:ppn=40

#PBS -l mem=20gb

#PBS -q cpu-test

cd $PBS_O_WORKDIR

source /etc/profile.d/gaussian.sh

g16 /home/vimal/GAUSSIAN/Methane-opt-s.gjf

To run the script, use the following command:

$ qsub g16test (g16test is the name of the job script above)

Example of ANSYS with PBS

#!/bin/bash

#PBS -N pbsmat

#PBS -l nodes=1:ppn=16

#PBS -l mem=200gb

#PBS -q cpu-test

export ANS_FLEXLM_DISABLE_DEFLICPATH=1

export ANSYSLMD_LICENSE_FILE=1055@10.10.100.19:1055@agastya1

export ANSYSLI_SERVERS=2325@10.10.100.19:2325@agastya1

cd $PBS_O_WORKDIR

echo $PBS_NODEFILE > out.$PBS_JOBID

cat $PBS_NODEFILE >> out.$PBS_JOBID

export ncpus=`cat $PBS_NODEFILE | wc -l`

dos2unix *.jou

/apps/codes/ANSYS/ansys_inc/v202/fluent/bin/fluent 2ddp -g -SSH -pinfiniband -mpi=default -t$ncpus -cnf=$PBS_NODEFILE -i $PBS_O_WORKDIR/run.jou >> out.$PBS_JOBID

Note: The run.jou file referenced above contains the instructions for the case file and the data file. The user creates this file alongside the PBS job script, for example:

/file confirm no

/server/start-server ,

rc init_SS_SST_kw_sigma_0pt2_ita_1e11_54826_elements.cas.h5

rd init_SS_SST_kw_sigma_0pt2_ita_1e11_54826_elements.dat.h5

it 10000

wcd tmp.cas.h5

/server/shutdown-server

exit yes

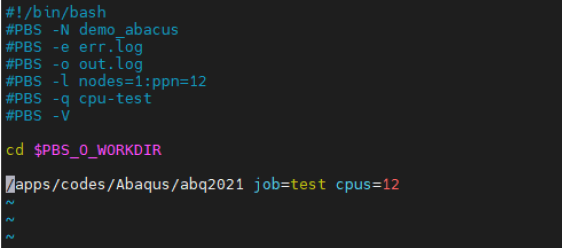

Example of ABAQUS with PBS

Note: In the above code, the user can change the number of nodes and processors according to the queue configuration.

HPC Usage Policy

Storage Quota Policy

- Every AGASTYA-HPC user is given a fixed storage quota with a soft limit of 2 TB, a hard limit of 3 TB, and a grace period of 7 days to store results.

- This disk space is provided in your home directory for running your programs.

- A user who exceeds the soft limit has only 7 days during which usage may remain above it; after that, jobs will stop or will not run.

- Users who exceed their allocated quota may not be able to run jobs from their home directory until they clean up and reduce their usage. They can also request additional storage with proper justification, which may be allocated subject to the availability of space.

- Users who require more storage space must raise a request with the HPC in-charge.

- Store only the data needed for computation. Do not use the HPC to store backups of your data.

- Kindly compress data that is not currently in use but may be needed in the future.

- HPC user data will be deleted as follows:

  - Normal user: 15 days after account expiry.

  - Faculty: 1 month after the retirement date.

  - Other scientific staff: two months after account expiry.

- It is the user's responsibility to move their data out, or to obtain proper approval from the HPC authority for extending the account expiry date.

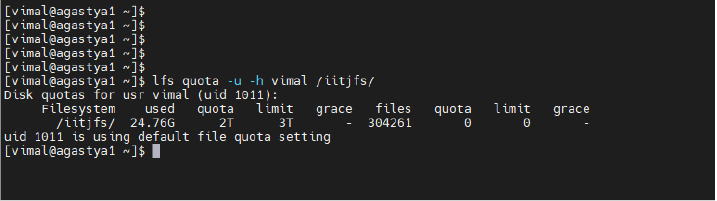

Users can check their available quota on AGASTYA-HPC with the following command:

$ lfs quota -h -u username /iitjfs
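The soft and hard limits of the storage quota policy can be turned into a simple check. The function below is a sketch that classifies a usage figure against the 2 TB soft limit and the 3 TB hard limit; the name `quota_status` is hypothetical, and the conversion 1 TB = 1024 GB is an assumption made for the arithmetic. It does not query the file system itself; the usage value would be read from the `lfs quota` output.

```shell
# quota_status USED_GB
# Compares a usage figure (in whole GB) with the soft and hard limits.
quota_status() {
    soft_gb=$(( 2 * 1024 ))   # 2 TB soft limit (assuming 1 TB = 1024 GB)
    hard_gb=$(( 3 * 1024 ))   # 3 TB hard limit
    if [ "$1" -ge "$hard_gb" ]; then
        echo "hard limit reached"
    elif [ "$1" -gt "$soft_gb" ]; then
        echo "over soft limit: 7-day grace period applies"
    else
        echo "within quota"
    fi
}

quota_status 1500   # prints "within quota"
quota_status 2500   # prints "over soft limit: 7-day grace period applies"
```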

User Account Deletion Policy

In AGASTYA-HPC, a user account is suspended as follows:

1-Normal user: 1 month after account expiry.

2-Faculty: 2 months after the retirement date.

3-Other scientific staff: three months after account expiry.